Deep Learning Model using Transformer and Attention Mechanisms

Transformer-based text translation

Project Details

- Category: Natural Language Processing

- Language: Python (with TensorFlow)

- Date: August 2023

- GitHub: Project Repository

- Project Notebook (in French)

I developed a machine translation system that translates Portuguese sentences into English. I designed the model's architecture, trained it on batches of data, and integrated attention mechanisms so that the model can learn which source words matter for each translated word. To inspect the model's behavior, I visualized attention weights for specific translations. Finally, I exported the trained model for deployment or further use in other applications. For this project, I followed the TensorFlow tutorials. To understand the architecture better, I built this Transformer model in Python and compared its performance with a simple seq2seq model enhanced with attention mechanisms.

Installation

When it comes to training deep learning models, especially those based on Transformers, computation can often be intensive. NVIDIA GPUs, with their parallel architecture, are well-suited for such tasks. Thus, checking the availability and specifications of an NVIDIA GPU is essential to ensure that the execution environment is optimized for training.

Working with text in TensorFlow usually requires additional packages for datasets and text processing. tensorflow_datasets provides a variety of standardized datasets ready for use, greatly simplifying data preparation. tensorflow_text is a TensorFlow extension that provides operations specific to text processing, such as tokenizers.

Importing various standard Python modules facilitates data manipulation, interaction with the operating system, and error handling.

Download the Dataset

Theoretical Aspect

Datasets are the cornerstone of any machine learning project. In the context of machine translation, having aligned sentence pairs in the source and target languages is essential to train the model.

The 'ted_hrlr_translate/pt_to_en' dataset from TensorFlow Datasets (TFDS) comes from TED talk transcripts and provides sentence pairs in multiple languages. In particular, 'pt_to_en' refers to Portuguese to English translation pairs. These data are ideal for training a translation model as they contain real sentences from professional talks.

Practical Aspect

Text Tokenization and Detokenization

Theoretical Aspect

Tokenization is the process of converting a text string into a sequence of tokens (e.g., words, subwords, or characters). It is a crucial step in text processing for machine learning as models operate on numerical representations rather than raw text strings. Additionally, tokenization allows better handling of unknown or rare words by breaking them down into more frequent subunits. Detokenization is the inverse process: converting a sequence of tokens back into a text string.
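As a minimal illustration of subword tokenization and detokenization, the sketch below uses a tiny made-up vocabulary and a greedy longest-match rule. It is not the tokenizer used in the project: `VOCAB`, `tokenize`, and `detokenize` are hypothetical names introduced only for this example.

```python
# Toy subword vocabulary (hypothetical); "##" marks a piece that continues a word.
VOCAB = ["[UNK]", "trans", "##form", "##er", "##s", "model", "the"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(text):
    """Split on whitespace, then greedily match the longest known subword."""
    ids = []
    for word in text.lower().split():
        start, pieces = 0, []
        while start < len(word):
            end, piece = len(word), None
            while end > start:
                candidate = word[start:end] if start == 0 else "##" + word[start:end]
                if candidate in TOKEN_TO_ID:
                    piece = candidate
                    break
                end -= 1
            if piece is None:            # nothing matches: the whole word is unknown
                pieces = ["[UNK]"]
                break
            pieces.append(piece)
            start = end
        ids.extend(TOKEN_TO_ID[p] for p in pieces)
    return ids

def detokenize(ids):
    """Map ids back to pieces and glue continuation pieces onto the previous word."""
    words = []
    for i in ids:
        tok = VOCAB[i]
        if tok.startswith("##") and words:
            words[-1] += tok[2:]
        else:
            words.append(tok)
    return " ".join(words)

ids = tokenize("the transformers model")
print(ids)
print(detokenize(ids))
```

The word "transformers" is not in the vocabulary, yet it is still represented exactly by four frequent pieces, which is the benefit of subword units for rare words.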

Practical Aspect

Configuring the Input Pipeline

Theoretical Aspect

One of the most important aspects of training a deep learning model, especially with textual data, is the processing and preparation of the data. The input pipeline handles this process through the following steps.

Practical Aspect

- cache(): Caches the elements of the dataset in memory after the first pass, which speeds up subsequent epochs.

- shuffle(BUFFER_SIZE): Shuffles the samples so that the model does not see them in the same order at every epoch, which could bias training.

- batch(BATCH_SIZE): Groups the samples into batches of size BATCH_SIZE.

- map(tokenize_pairs, num_parallel_calls=tf.data.AUTOTUNE): Applies the tokenization function to each batch, processing several batches in parallel.

- prefetch(tf.data.AUTOTUNE): Prepares the next batches while the current one is being processed, which avoids waiting times during training.

Finally, batches for the training and validation datasets were created using the make_batches function.
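The steps listed above can be sketched on a toy in-memory dataset. This is only an illustration under assumptions: the sentences, the values of `BUFFER_SIZE` and `BATCH_SIZE`, and the character-level stand-in inside `tokenize_pairs` are invented for the example and are not the project's actual data or tokenizer.

```python
import tensorflow as tf

BUFFER_SIZE = 100   # illustrative values, not the project's settings
BATCH_SIZE = 2

# Toy Portuguese/English pairs standing in for the TED dataset.
pt = ["olá mundo", "bom dia", "obrigado", "até logo"]
en = ["hello world", "good morning", "thank you", "see you later"]
examples = tf.data.Dataset.from_tensor_slices((pt, en))

def tokenize_pairs(pt_batch, en_batch):
    # Stand-in tokenizer: Unicode code points, zero-padded to the longest sentence in the batch.
    pt_ids = tf.strings.unicode_decode(pt_batch, "UTF-8").to_tensor()
    en_ids = tf.strings.unicode_decode(en_batch, "UTF-8").to_tensor()
    return pt_ids, en_ids

def make_batches(ds):
    return (ds
            .cache()
            .shuffle(BUFFER_SIZE)
            .batch(BATCH_SIZE)
            .map(tokenize_pairs, num_parallel_calls=tf.data.AUTOTUNE)
            .prefetch(tf.data.AUTOTUNE))

train_batches = make_batches(examples)
for pt_ids, en_ids in train_batches.take(1):
    print(pt_ids.shape, en_ids.shape)
```

Because batching happens before tokenization, the stand-in tokenizer receives a whole batch of strings and can pad it to a rectangular tensor in one call.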

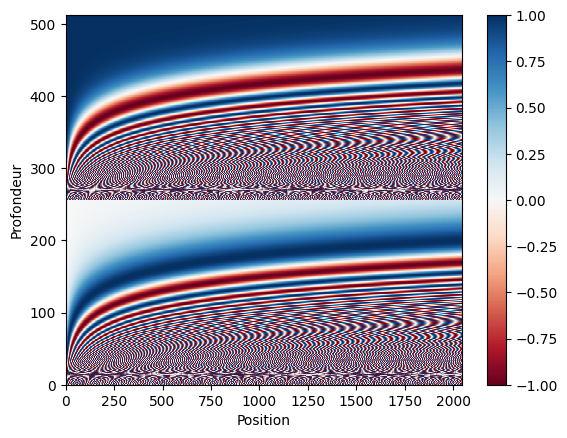

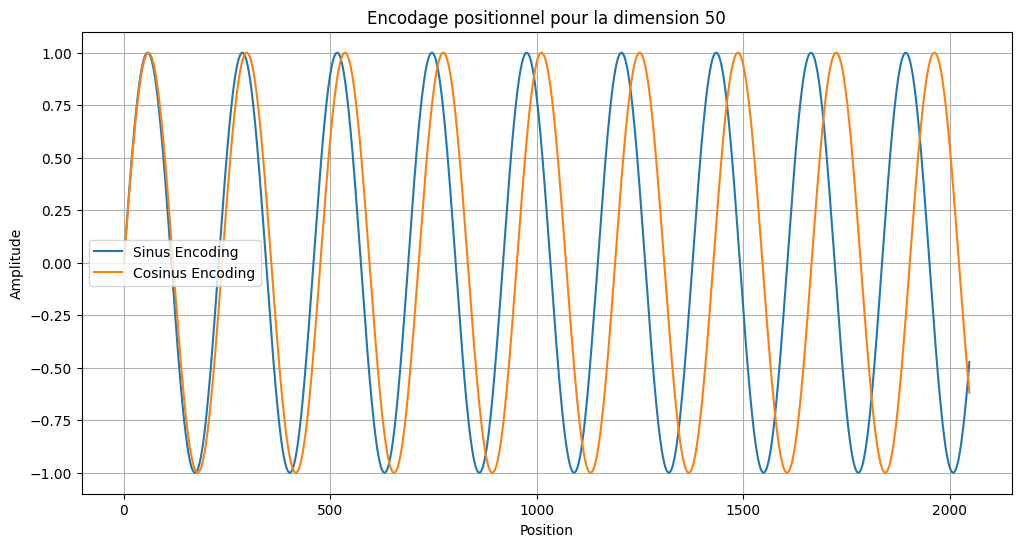

Positional Encoding

In sequence processing architectures, especially Transformers, the order of words is crucial. Positional encoding is a mechanism that allows models to take into account the order of words in a sequence, even when they don't have recurrent or convolutional mechanisms.

Theoretical Aspect

Practical Aspect
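A minimal NumPy sketch of the sinusoidal positional encoding from the original Transformer paper is shown here, assuming the project uses this standard formulation. The function name and the sizes are illustrative, and this is not the project's TensorFlow code.

```python
import numpy as np

def positional_encoding(max_position, d_model):
    """Return an array of shape (max_position, d_model) of sinusoidal encodings."""
    positions = np.arange(max_position)[:, np.newaxis]      # (max_position, 1)
    dims = np.arange(d_model)[np.newaxis, :]                 # (1, d_model)
    # Each pair of dimensions (2i, 2i+1) shares the frequency 1 / 10000^(2i / d_model).
    angle_rates = 1.0 / np.power(10000.0, (2 * (dims // 2)) / d_model)
    angles = positions * angle_rates
    encoding = np.zeros((max_position, d_model))
    encoding[:, 0::2] = np.sin(angles[:, 0::2])   # even dimensions use sine
    encoding[:, 1::2] = np.cos(angles[:, 1::2])   # odd dimensions use cosine
    return encoding

pe = positional_encoding(max_position=50, d_model=16)
print(pe.shape)
```

The resulting table is added to the token embeddings, so that two identical tokens at different positions receive different input vectors.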

Ultimately, positional encoding is a powerful tool for incorporating positional information into models that would otherwise lack this capability. This allows models like Transformers to achieve impressive performance on sequence processing tasks.

Masking

The ability of a model to sequentially process information is essential, especially when predicting the next words or tokens in a sequence. This is where masking mechanisms come into play. They allow ignoring certain tokens or ensuring that when predicting a token at a certain time step, only the previous (or valid) information is used.

Theoretical Aspect

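There are two types of masks: the padding mask, which marks the padding positions in a sequence so that they can be ignored, and the look-ahead mask, which hides the tokens that come after the current position.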

Practical Aspect

Examples: I provided examples to demonstrate how these functions create masks. For instance, the padding mask for a sequence with zeros will show where the paddings are, and the look-ahead mask for a sequence will show a triangular shape, masking all tokens after the current token.
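The two masks can be sketched in NumPy as follows. The convention that 1 marks a masked position, and the `(batch, 1, 1, seq_len)` shape of the padding mask, follow the TensorFlow tutorial and are assumptions about the project's code.

```python
import numpy as np

def create_padding_mask(seq):
    """1.0 where the token id is 0 (padding), 0.0 elsewhere; shape (batch, 1, 1, seq_len)."""
    mask = (seq == 0).astype(np.float32)
    return mask[:, np.newaxis, np.newaxis, :]

def create_look_ahead_mask(size):
    """1.0 above the diagonal: position i may not attend to positions j > i."""
    return np.triu(np.ones((size, size), dtype=np.float32), k=1)

seq = np.array([[7, 6, 0, 0, 1], [1, 2, 3, 0, 0]])
print(create_padding_mask(seq)[:, 0, 0, :])
print(create_look_ahead_mask(3))
```

The extra axes of the padding mask let it broadcast over the attention heads and the query positions when it is added to the attention scores.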

In summary, masking is crucial to ensure that models process data appropriately, ignoring paddings and ensuring that during prediction, only valid information is used.

Scaled Dot-Product Attention

Theoretical Aspect

Scaled Dot-Product Attention is a mechanism that allows a model to assign different amounts of attention to different parts of a sequence. It is the key ingredient of the Transformer architecture, which has revolutionized natural language processing tasks.

Practical Aspect

1. Calculates the dot product between the query q and the key k.

2. Scales this value with \( \sqrt{d_k} \), where \( d_k \) is the dimension of the key.

3. If a mask is provided, adds a large negative value to the scaled scores at the masked positions (padding or future tokens), so that they receive almost no weight after the softmax.

4. Applies the softmax function to obtain attention weights.

5. Multiplies the attention weights by the value v to obtain the final result.
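The five steps above can be sketched in NumPy as follows. This is an illustrative re-implementation rather than the project's TensorFlow function; the `-1e9` constant used for masking and the example tensors are assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v, mask=None):
    """q: (..., len_q, d_k), k: (..., len_k, d_k), v: (..., len_k, d_v)."""
    scores = q @ np.swapaxes(k, -1, -2)            # step 1: dot products
    scores = scores / np.sqrt(k.shape[-1])         # step 2: scale by sqrt(d_k)
    if mask is not None:
        scores = scores + mask * -1e9              # step 3: masked positions become very negative
    weights = softmax(scores, axis=-1)             # step 4: attention weights
    return weights @ v, weights                    # step 5: weighted sum of the values

k = np.array([[10., 0., 0.], [0., 10., 0.], [0., 0., 10.], [0., 0., 10.]])
v = np.array([[1., 0.], [10., 0.], [100., 5.], [1000., 6.]])
q = np.array([[0., 10., 0.]])                      # aligns with the second key
output, weights = scaled_dot_product_attention(q, k, v)
print(np.round(weights, 3))
print(np.round(output, 3))
```

Since the query matches only the second key, almost all of the attention weight falls on the second value, which is then returned nearly unchanged.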

Usage Examples: Multiple examples of queries, keys, and values are provided to show how the attention mechanism works. Each example illustrates how different queries allocate different attention to the set of keys.

In summary, Scaled Dot-Product Attention is a mechanism that allows a model to weigh the importance of different parts of a sequence when making decisions. These attention weights can be interpreted as the measure of how much the model "pays attention" to different parts of the input.

Multi-Head Attention

Theoretical Aspect

Multi-Head Attention is a technique to enhance the attention mechanism's ability to focus on different parts of a sequence simultaneously. Instead of having a single set of attention weights, multi-head attention uses multiple "heads" to capture different types of relational information.

Why "Multi-Head"? Each attention "head" could potentially learn to focus on different aspects of the data. By combining the results from multiple heads, we obtain a representation that captures diverse relationships in the data.
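To make the idea of several heads concrete, here is a simplified NumPy sketch of multi-head self-attention. It assumes random projection matrices in place of learned weights and omits biases, masking, and dropout; `split_heads` and `multi_head_attention` are illustrative names, not the project's Keras layer.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def split_heads(x, num_heads):
    """(batch, seq_len, d_model) -> (batch, num_heads, seq_len, depth)."""
    batch, seq_len, d_model = x.shape
    depth = d_model // num_heads
    return x.reshape(batch, seq_len, num_heads, depth).transpose(0, 2, 1, 3)

def multi_head_attention(x, wq, wk, wv, wo, num_heads):
    """Self-attention over x with projections wq, wk, wv, wo of shape (d_model, d_model)."""
    q, k, v = (split_heads(x @ w, num_heads) for w in (wq, wk, wv))
    scores = q @ k.transpose(0, 1, 3, 2) / np.sqrt(q.shape[-1])
    heads = softmax(scores) @ v                        # (batch, heads, seq_len, depth)
    batch, _, seq_len, depth = heads.shape
    concat = heads.transpose(0, 2, 1, 3).reshape(batch, seq_len, num_heads * depth)
    return concat @ wo                                 # final linear projection

rng = np.random.default_rng(0)
d_model, num_heads = 16, 4
x = rng.normal(size=(2, 5, d_model))
wq, wk, wv, wo = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))
out = multi_head_attention(x, wq, wk, wv, wo, num_heads)
print(out.shape)
```

Each head works on its own slice of size `d_model // num_heads`, so the total cost stays close to that of a single full-width attention.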

Practical Aspect

The MultiHeadAttention class is a custom Keras layer that implements multi-head attention.

- num_heads: The number of heads for multi-head attention.

- dff: The dimension of the hidden layer. This is the dimension after the first dense layer before reduction to d_model by the second layer.

- Second Layer: A dense layer that reduces the dimension to d_model.
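The two dense layers described by `dff` and the second layer can be sketched as a point-wise feed-forward network in NumPy. The ReLU activation is an assumption (it is the usual choice in the Transformer), and the small sizes and random weights are illustrative only.

```python
import numpy as np

def point_wise_feed_forward(x, w1, b1, w2, b2):
    """Expand to dff with ReLU, then project back to d_model, independently at every position."""
    hidden = np.maximum(0.0, x @ w1 + b1)   # (batch, seq_len, dff)
    return hidden @ w2 + b2                 # (batch, seq_len, d_model)

rng = np.random.default_rng(0)
d_model, dff = 8, 32                        # small illustrative sizes
w1, b1 = rng.normal(size=(d_model, dff)), np.zeros(dff)
w2, b2 = rng.normal(size=(dff, d_model)), np.zeros(d_model)
x = rng.normal(size=(2, 5, d_model))
y = point_wise_feed_forward(x, w1, b1, w2, b2)
print(y.shape)
```

"Point-wise" means that the same two layers are applied to each position separately, so the output at one position does not depend on the other positions.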

Example of Usage: The code creates an example of an encoder layer with a model dimension of 512, eight attention heads, and a hidden layer dimension of 2048. It then passes a tensor of shape (64, 43, 512) through this encoder layer and displays the shape of the output.

In summary, the encoder layer is a core component of the Transformer architecture and is typically stacked multiple times to form the complete encoder. It transforms input representations using multi-head attention and the point-wise feed-forward network.

Decoder Layer

Theoretical Aspect

In the Transformer architecture, each decoder layer consists of three major sub-layers: two multi-head attention blocks and a point-wise feed-forward network.

The first multi-head attention block works like the self-attention in the encoder, with one addition: a look-ahead mask prevents each position from attending to future positions.

The second multi-head attention block is used for the decoder to focus its attention on relevant outputs from the encoder. In other words, the decoder receives the encoder's outputs and tries to associate them with its own input to better infer the next output.

The point-wise feed-forward network works the same way as in the encoder.
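The data flow through the three sub-layers can be sketched in NumPy as follows. This is a deliberately simplified sketch: it uses a single attention head without learned projections, no dropout, and random feed-forward weights, and it assumes each sub-layer is wrapped in a residual connection followed by layer normalization.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def attention(q, k, v, mask=None):
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(k.shape[-1])
    if mask is not None:
        scores = scores + mask * -1e9       # mask == 1 marks forbidden positions
    return softmax(scores) @ v

def layer_norm(x, eps=1e-6):
    return (x - x.mean(axis=-1, keepdims=True)) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)

def decoder_layer(x, enc_output, w_ffn1, w_ffn2):
    tgt_len = x.shape[1]
    look_ahead_mask = np.triu(np.ones((tgt_len, tgt_len)), k=1)
    # 1) masked self-attention over the target sequence
    out1 = layer_norm(x + attention(x, x, x, look_ahead_mask))
    # 2) attention over the encoder output (queries come from the decoder)
    out2 = layer_norm(out1 + attention(out1, enc_output, enc_output))
    # 3) point-wise feed-forward network
    ffn = np.maximum(0.0, out2 @ w_ffn1) @ w_ffn2
    return layer_norm(out2 + ffn)

rng = np.random.default_rng(0)
d_model, dff = 8, 32
x = rng.normal(size=(2, 6, d_model))            # decoder input (batch, tgt_len, d_model)
enc_output = rng.normal(size=(2, 4, d_model))   # encoder output (batch, src_len, d_model)
w_ffn1 = rng.normal(size=(d_model, dff))
w_ffn2 = rng.normal(size=(dff, d_model))
out = decoder_layer(x, enc_output, w_ffn1, w_ffn2)
print(out.shape)
```

Because of the look-ahead mask, the output at the first target position does not change when later target tokens are modified, which is what makes step-by-step generation possible.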

Practical Aspect

The class DecoderLayer defines a decoder layer.

- num_heads: The number of heads for multi-head attention.

- dff: The dimension of the hidden layer in the point-wise feed-forward network.

- rate: The dropout rate.

- ffn: The point-wise feed-forward network.

- layernorm1, layernorm2, and layernorm3: Layer normalizations.

- dropout1, dropout2, and dropout3: Dropout layers.

Example of Use: The code creates an example of a decoder layer with a model dimension of 512, eight heads for multi-head attention, and a hidden layer dimension of 2048. It then processes an input tensor of shape (64, 50, 512) through this decoder layer and displays the shape of the output.

In summary, the decoder layer is another essential component of the Transformer architecture. It progressively generates the target sequence by attending to its own previous output and the encoder's output simultaneously. These combined pieces of information enable it to generate the next element of the target sequence.

Encoder

Theoretical Aspect

The encoder in the Transformer architecture takes an input sequence, transforms it into embeddings, adds positional information, and passes this combined representation through a stack of encoder layers.

Each encoder layer contains two main sub-layers: a multi-head attention block and a point-wise feed-forward network. Dropout is applied to the output of each sub-layer to reduce overfitting. That output is then added to the sub-layer's input (residual connection) and normalized with layer normalization before being passed on.
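The overall flow of the encoder (embedding lookup, positional information, then a stack of layers with residual connections and layer normalization) can be sketched in NumPy. Everything below is a placeholder chosen for illustration: the positional table is random, the sub-layers are random linear maps standing in for attention and feed-forward blocks, and dropout is omitted.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def encoder(token_ids, embedding, pos_encoding, layers):
    seq_len = token_ids.shape[1]
    x = embedding[token_ids] + pos_encoding[:seq_len]   # embeddings + positions
    for sublayers in layers:                             # one entry per encoder layer
        for sublayer in sublayers:                       # attention, then feed-forward
            x = layer_norm(x + sublayer(x))              # residual connection + layer norm
    return x

rng = np.random.default_rng(0)
vocab_size, max_len, d_model, num_layers = 20, 10, 8, 2
embedding = rng.normal(size=(vocab_size, d_model))
pos_encoding = rng.normal(size=(max_len, d_model))      # placeholder positional table
# Placeholder sub-layers: random linear maps standing in for attention and feed-forward.
layers = [[(lambda x, w=rng.normal(size=(d_model, d_model)): x @ w) for _ in range(2)]
          for _ in range(num_layers)]
token_ids = rng.integers(1, vocab_size, size=(2, 5))
out = encoder(token_ids, embedding, pos_encoding, layers)
print(out.shape)
```

The output keeps the shape `(batch, seq_len, d_model)` at every layer, which is what allows the layers to be stacked.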

Practical Aspect

Let's take a look at the class Encoder.


Decoder

Theoretical Aspect

The decoder in the Transformer architecture is responsible for taking the output of the encoder and producing a target sequence. Like the encoder, the decoder is composed of stacked layers. However, each decoder layer has three main sub-layers: two multi-head attention layers (one used for attention with respect to the encoder's outputs and the other for attention with respect to the previous layer's decoder output) and a point-wise feed-forward network.

Practical Aspect

The class Decoder defines the decoder.

- d_model: The output dimension.

- num_heads: The number of heads for multi-head attention.

- dff: The dimension of the hidden layer in the point-wise feed-forward network.

- target_vocab_size: Size of the target vocabulary.

- maximum_position_encoding: Maximum dimension for positional encoding.

- rate: The dropout rate.

Example of Use: A decoder example is created with two layers, a model dimension of 512, eight heads for multi-head attention, a hidden layer dimension of 2048, a target vocabulary size of 8000, and a maximum positional encoding of 5000. It then processes an input tensor through this decoder and displays the shape of the output as well as the attention weights.

In short, the decoder progressively generates the target sequence by attending to its own previous output and the encoder's output simultaneously. These combined pieces of information enable it to generate the next element of the target sequence.

Creating the Transformer

Theoretical Aspect

The Transformer is a sequence processing architecture that uses attention mechanisms to better capture contextual relationships at different spans in a sequence. Unlike RNN and LSTM architectures, the Transformer does not rely on sequential computations, making it more parallelizable and potentially faster. The architecture is composed of two main components: an encoder and a decoder.

Practical Aspect

Let's take a look at the class Transformer.

- encoder: Initializes the encoder.

- decoder: Initializes the decoder.

- final_layer: A dense layer that converts the decoder's output into predictions of size target_vocab_size.

Example of Use: A sample Transformer is created with given parameters and then tested with randomly generated input and target sequences. The shape of the output is then displayed.

In summary, the Transformer is a powerful and flexible architecture for sequence processing, particularly suitable for tasks like machine translation, but it is also used in various other natural language processing tasks.

Defining Hyperparameters

Theoretical Aspect

Hyperparameters are parameters that are not learned during the training of a model, but are defined in advance. They influence the performance, capacity, and behavior of a model. Here is a brief description of the provided hyperparameters:

- num_layers: Number of layers in the encoder and decoder. This determines the depth of the model. The more layers there are, the more the model can potentially learn complex representations, but it also increases the risk of overfitting and training time.

- d_model: Model dimension, i.e., the size of embeddings at each layer. A higher dimension allows the model to represent more complex data, but it can also increase the risk of overfitting.

- dff: Dimension of the intermediate layer in the feed-forward network. This influences the network's capacity to learn complex transformations between layers.

- num_heads: Number of heads in the multi-head attention. Multi-head attention allows the model to focus on different parts of a sequence simultaneously, which can improve performance for certain tasks.

- dropout_rate: Probability of dropping a unit during training. Dropout is a regularization technique that can help prevent overfitting.

Practical Aspect

These hyperparameters are generally determined through a combination of experience, domain knowledge, and experimentation. Sometimes, hyperparameter search techniques (such as grid search or Bayesian search) are used to find the best combination for a given task.

In the given example:

- We use 4 layers for both the encoder and decoder.

- The embedding dimension is 128.

- The intermediate feed-forward layer has a dimension of 512.

- Multi-head attention is configured with 8 heads.

- The dropout rate is 0.1, meaning that during training, each unit (neuron) has a 10% probability of being dropped at each training step.
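Written as Python constants, the configuration above looks like the following. The `depth_per_head` variable is added here only to show how the model dimension is shared between the attention heads; it is not one of the listed hyperparameters.

```python
num_layers = 4
d_model = 128
dff = 512
num_heads = 8
dropout_rate = 0.1

# d_model must be divisible by num_heads so that each head gets an equal slice.
assert d_model % num_heads == 0
depth_per_head = d_model // num_heads
print(depth_per_head)
```

With these values, each of the 8 heads attends over a 16-dimensional slice of the 128-dimensional representation.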

In general, the configuration of these hyperparameters can have a significant impact on the model's performance, so it is important to choose them wisely.

Optimizer

Theoretical Aspect

The optimizer is the algorithm used to update a model's parameters in response to the error it produces during training. The Adam optimizer is a variant of the stochastic gradient descent algorithm that calculates adaptive learning rates for each parameter. In the Transformer, a commonly used technique is to adjust the learning rate during training using a special learning rate schedule. This schedule increases the learning rate linearly for the first `warmup_steps` steps and then decreases it proportionally to the inverse square root of the step.

Practical Aspect

The `CustomSchedule` class defines this learning rate schedule. It takes into account the model dimension `d_model` and the number of warm-up steps `warmup_steps`. When instantiating `CustomSchedule`, you can specify `d_model` and `warmup_steps` (which has a default value of 4000).

The Adam optimizer is then initialized with this custom learning rate. The `beta_1`, `beta_2`, and `epsilon` parameters are typical hyperparameters of the Adam optimizer. The visualization at the end shows how the learning rate evolves with the training steps. At the beginning, the learning rate increases, but after a certain number of steps (`warmup_steps`), it starts to decrease.
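The schedule described above corresponds to the formula from the original Transformer paper. A minimal pure-Python sketch follows, where the default `d_model=128` is taken from the hyperparameters of this project and the function name is illustrative (it is not the `CustomSchedule` class itself).

```python
def learning_rate(step, d_model=128, warmup_steps=4000):
    """lr = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5), for step >= 1."""
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

for step in (1, 1000, 4000, 16000, 40000):
    print(step, learning_rate(step))
```

During warm-up the second term of the `min` is the smaller one, so the rate grows linearly; after `warmup_steps` the first term takes over and the rate decays as the inverse square root of the step.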

This special approach to the learning rate was used in the original Transformer paper and is thought to offer better performance, especially in natural language processing tasks such as translation. However, like all hyperparameters and training techniques, the relevance and efficacy may vary depending on the specific task and data to which the model is applied.

Loss and Metrics

Theoretical Aspect

The loss is a measure that quantifies how much the model's predictions deviate from the true values. The loss function chosen here is `SparseCategoricalCrossentropy`, which is commonly used for classification tasks where the classes are mutually exclusive.

The accuracy is a metric that quantifies the percentage of correct predictions relative to all predictions.

Practical Aspect

The `loss_object` is an instance of `SparseCategoricalCrossentropy`. It calculates the categorical cross-entropy loss between the actual labels and the predictions. The `from_logits=True` argument means that the function expects the predictions to be unnormalized logits rather than normalized probabilities.

The `loss_function` is a custom version of the loss function that takes into account padding sequences (zero values). Padding is used to make all sequences have the same length during training, but we do not want to include these padding values in the loss calculation. For this purpose, a mask is created to identify these padding values and exclude them from the loss calculation.

Similarly, the `accuracy_function` calculates accuracy while excluding padding sequences from the calculation.
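The masking logic of both functions can be sketched in NumPy as follows. This is an illustrative re-implementation, not the project's TensorFlow code; averaging over the number of non-padding tokens is an assumption about how the masked values are normalized.

```python
import numpy as np

def masked_loss_and_accuracy(real, logits):
    """real: (batch, seq_len) token ids with 0 = padding; logits: (batch, seq_len, vocab)."""
    mask = (real != 0).astype(np.float64)
    # Log-softmax computed from raw logits (the from_logits=True case).
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Negative log-likelihood of the true token at every position.
    nll = -np.take_along_axis(log_probs, real[..., np.newaxis], axis=-1)[..., 0]
    loss = (nll * mask).sum() / mask.sum()
    correct = (logits.argmax(axis=-1) == real).astype(np.float64)
    accuracy = (correct * mask).sum() / mask.sum()
    return loss, accuracy

real = np.array([[2, 1, 0, 0]])
logits = np.array([[[0., 0., 5.],     # predicts 2: correct
                    [5., 0., 0.],     # predicts 0: wrong (true token is 1)
                    [0., 9., 0.],     # padding position, ignored
                    [0., 0., 9.]]])   # padding position, ignored
loss, accuracy = masked_loss_and_accuracy(real, logits)
print(loss, accuracy)
```

Only the two real tokens contribute, so whatever the model predicts at the padded positions has no effect on either value.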

`train_loss` and `train_accuracy` are metrics that will track and average the loss and accuracy, respectively, during training. They are initialized with `tf.keras.metrics.Mean` to accumulate values and compute their mean over time.

In the context of training natural language processing models, considering these padding sequences is essential to obtain accurate evaluations of the model's performance. These custom functions ensure that the model is evaluated only on the relevant elements of the sequence and not on the added padding.

Training and Checkpointing

Theoretical Aspect

The training (or fitting) of a machine learning model involves iteratively adjusting its parameters to minimize a cost or loss function. Checkpointing saves the model's parameters at different stages of training, making it easier to resume training, validate the model, or deploy it in future applications.

Practical Aspect

Each training step does the following:

- Calculates predictions and loss using a gradient tape.

- Updates the model's parameters using the optimizer.

This code encapsulates the typical logic for training and checkpointing a deep learning model. It illustrates the essential steps to train a complex model like the Transformer while ensuring, through regular checkpointing, that progress is not lost.

Running Inference

Theoretical Aspect

Inference refers to the process of using an already trained model to make predictions on new unseen data. In this context, inference concerns translating sentences from one language to another.
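One common way to run inference with such a model is greedy decoding: start from a start token and repeatedly append the most likely next token until an end token appears. The sketch below assumes this strategy and uses a toy stand-in for the trained model; the token ids, `MAX_LENGTH`, and both function names are hypothetical.

```python
START, END = 1, 2          # hypothetical ids for the start and end tokens
MAX_LENGTH = 10

def toy_next_token_scores(source_ids, output_ids):
    """Stand-in for a trained model: scores that copy the source, then emit END."""
    vocab_size = 8
    position = len(output_ids) - 1            # number of tokens generated so far
    target = source_ids[position] if position < len(source_ids) else END
    return [1.0 if tok == target else 0.0 for tok in range(vocab_size)]

def greedy_translate(source_ids, next_token_scores):
    output_ids = [START]
    for _ in range(MAX_LENGTH):
        scores = next_token_scores(source_ids, output_ids)
        next_id = max(range(len(scores)), key=scores.__getitem__)   # argmax
        output_ids.append(next_id)
        if next_id == END:                    # stop once the end token is produced
            break
    return output_ids

print(greedy_translate([5, 3, 7], toy_next_token_scores))
```

In a real setting, `next_token_scores` would call the trained Transformer on the tokenized source sentence and the tokens generated so far, and the resulting ids would be detokenized into text.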

Practical Aspect

Example: Finally, an example of translation is provided. A sentence is translated, and the result is displayed. This example demonstrates how to use a trained Transformer model to translate sentences from Portuguese to English. The structure allows for easy integration of other sentences or modification of the translation logic if needed.

Attention Plots

Theoretical Aspect

The attention mechanism is a key innovation in the field of natural language processing. It allows a model, such as the Transformer, to "focus" on different parts of an input sequence when producing an output sequence. Attention plots visualize these weights, giving an idea of which part of the input the model considers most relevant at each step of the output sequence generation.

Practical Aspect

This example illustrates the importance of visualizing attention weights to understand the internal workings of a Transformer model. The plots show which input tokens the model attends to at each output step, which can suggest ways to improve its performance or architecture.